10 Minute Consultation: Dr Imran Rafi

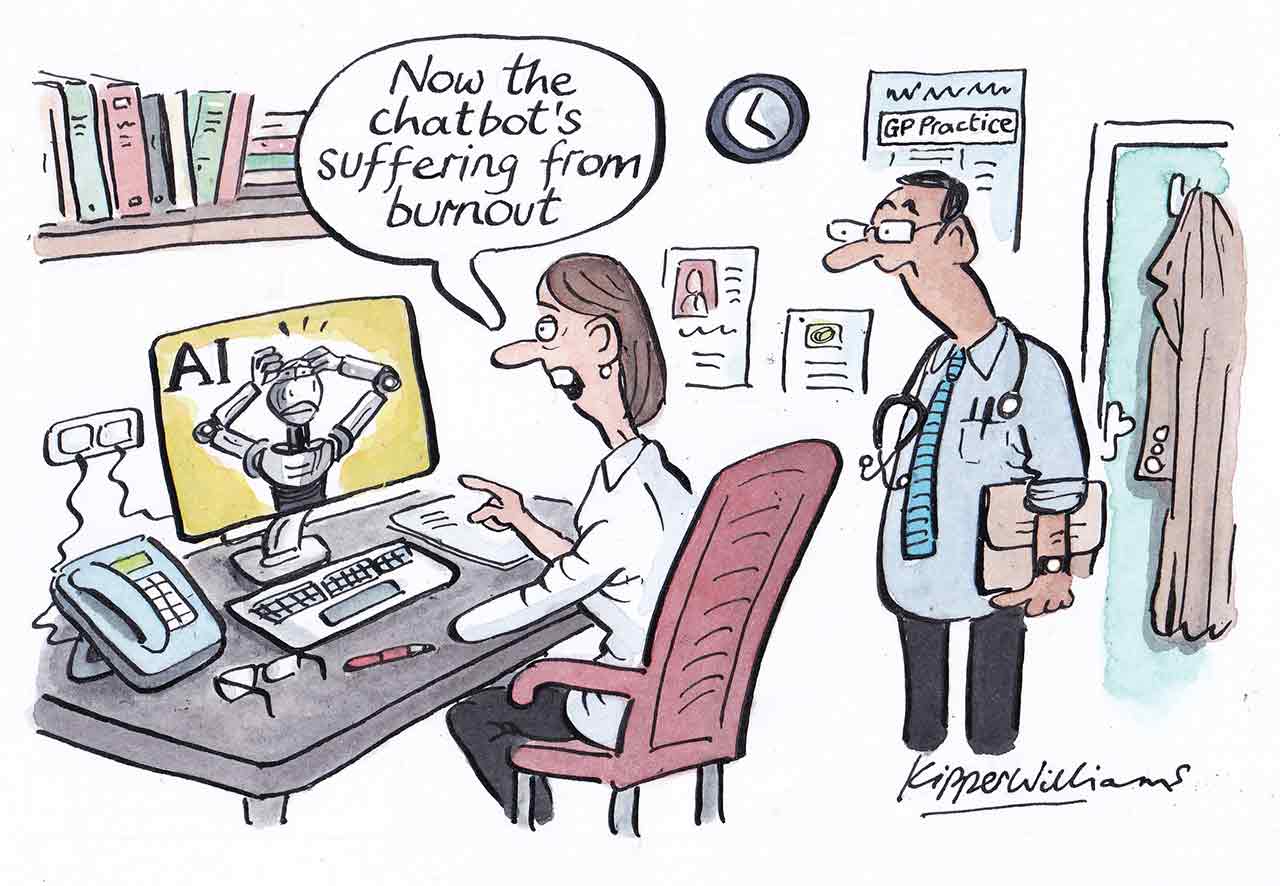

Dr Ben Brown explores the role of AI in primary care and how it can ‘enhance efficiency’ without ‘replacing humanity’

With companies like IKEA, Duolingo, and Microsoft using artificial intelligence (AI) for roles traditionally held by humans, healthcare faces a pivotal question: can AI be our ally rather than our enemy?

As a general practitioner with a background in developing AI tools, I've observed a range of reactions within the medical community, from excitement to scepticism, regarding the adoption of AI in clinical practice. I believe that AI should not be viewed as a threat, but as a collaborator with the potential to enhance patient care and make our lives easier.

Decoding AI: what is it?

There are many different definitions of AI. My favourite is from Richard Bellman, who described AI as the automation of activities “we associate with human thinking, activities such as decision-making, problem-solving, learning…”

AI has been around since the 1950s. It initially relied on humans explicitly programming it to perform tasks. By the 1990s, it had shifted towards a more data-driven approach called machine learning. Here, computers ‘learn’ to perform tasks on their own by analysing data on how those tasks were done historically. These advances have led to AI tools like ChatGPT and Gemini, which can generate novel outputs such as prose, images, music, or videos.

The role of AI in primary care

I think of AI as broadly able to perform two types of task:

- Tasks humans can already do, but which AI can do for longer periods, faster, more cheaply, on a larger scale, and with less variation.

- Tasks humans cannot do well, such as interpreting millions of data points simultaneously or accurately predicting the future.

In primary care, the first type of task may include things like patient triage, filing blood results or letters, or transcribing and summarising telephone or in-person consultations.

The second type of task may include things like calculating the prognosis of a disease, a patient’s risk of hospital admission, or their response to treatment based on their genetic profile.

Adopting AI in clinical practice

Introducing AI into clinical practice presents many challenges. First and foremost, an AI tool needs to be both verifiable and useful to the people who use it. In my experience, both qualities are closely linked to the type of task the AI performs.

AI tends to be more readily adopted when it performs tasks that humans can already do, especially when its outputs relate to the current state of the world. This is because a human can immediately verify the AI’s performance and also benefit from its actions.

In primary care, examples include patient triage and care navigation. A person can instantly check whether the AI has made the correct triage decision. At the same time, the patient benefits by quickly receiving treatment from the most appropriate service, which may be outside the GP practice, such as a pharmacy.

AI may face more challenges in adoption when it performs tasks humans struggle with, particularly when its outputs relate to the future state of the world. One reason is that it is hard to verify how well the AI has performed when we cannot immediately see the results. Another is that we may have little control over the future.

In primary care, for instance, this could mean predicting a patient’s risk of hospital admission. Such predictions concern events that may not happen for months, making it difficult to verify the accuracy of the AI's assessment. We may also be unable to take any preventative action in the present to stop the patient being admitted to hospital.

A further challenge is that AI used in healthcare is usually classified as a medical device, and should therefore comply with medical device regulation. This requires AI tools to be scientifically validated and clinically safe before they are used in practice. This can be particularly difficult for generative AI, which produces a wide variety of outputs and is frequently updated by its developers. As a result, designing a robust study to evaluate its accuracy can be problematic.

For these reasons, GP practices should always review the product documentation and accuracy reports when adopting new AI tools.

Balancing innovation with tradition

But fear not – despite AI's potential to transform primary care, we humans are still needed. AI outputs require interpretation. They need to be incorporated into holistic care plans that meet each patient’s unique needs. These plans must then be communicated to patients with empathy, explaining their risks and benefits. These are areas where GPs excel.

AI’s value lies in performing tasks that improve our efficiency and free up time for us to care for our patients. As Charles Friedman wrote, “a person working in partnership with an information resource is ‘better’ than that same person unassisted.”

In this new world of AI, our tasks will be automated, but people won’t be; our jobs may change, but they won’t be replaced.

Dr Ben Brown is a GP partner in Salford, a Clinical Senior Lecturer at the University of Manchester and Chief Medical Officer for Patchs Health
